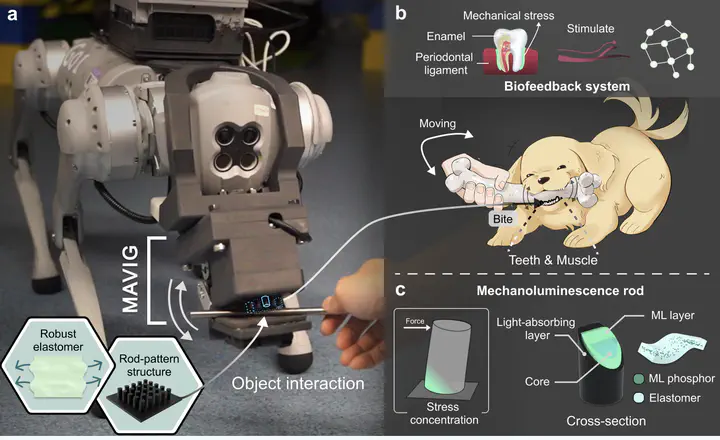

A Bio-Inspired Event-Driven Mechanoluminescent Visuotactile Sensor for Intelligent Interactions

Abstract

Event-driven sensors are essential for real-time applications, yet current technologies remain difficult to integrate because of high cost, complex signal processing, and vulnerability to noise. This work introduces a bio-inspired mechanoluminescent visuotactile sensor that enables standard frame-based cameras to perform event-driven sensing: the sensor emits light only under mechanical stress, so the emission itself acts as an event trigger. Drawing inspiration from the biomechanics of canine teeth, the sensor uses a rod-patterned array to enhance mechanoluminescent signal sensitivity and expand the contact surface area. In addition, a machine-learning-enabled algorithm is designed to accurately analyze the interaction-triggered mechanoluminescence signal in real time. The sensor is integrated into the mouth interface of a quadruped robot, demonstrating enhanced interactive capabilities. The system classifies eight interactive activities with an average accuracy of 92.68%. Comprehensive tests validate the sensor’s efficacy in capturing dynamic tactile signals and in broadening the range of robot applications that involve interaction with the environment.
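To illustrate the event-trigger idea in general terms, the following minimal sketch shows how a frame-based camera stream could be gated by emitted light, so that only frames containing mechanoluminescence are passed on for analysis. It is an assumption-laden illustration and not the processing pipeline of this work: the function names (`frame_energy`, `detect_events`), the grayscale frame representation, and both threshold values are hypothetical.

```python
from typing import List, Sequence

# Hypothetical representation: a grayscale frame as rows of 0-255 pixel values.
Frame = Sequence[Sequence[int]]


def frame_energy(frame: Frame, pixel_threshold: int = 30) -> int:
    """Sum the intensity of pixels brighter than pixel_threshold.

    Pixels at or below the threshold are treated as dark noise and ignored.
    """
    return sum(p for row in frame for p in row if p > pixel_threshold)


def detect_events(
    frames: Sequence[Frame],
    pixel_threshold: int = 30,
    energy_threshold: int = 200,
) -> List[int]:
    """Return the indices of frames whose emitted light exceeds energy_threshold."""
    return [
        i
        for i, frame in enumerate(frames)
        if frame_energy(frame, pixel_threshold) > energy_threshold
    ]


# Toy data (assumed values): the sensor stays dark unless it is stressed.
dark = [[0, 5, 3], [2, 0, 4], [1, 3, 0]]
touch = [[0, 120, 3], [2, 200, 4], [1, 90, 0]]
frames = [dark, dark, touch, dark]

print(detect_events(frames))  # [2]
```

Because the sensor is dark at rest, a simple intensity gate of this kind is enough to discard idle frames; a practical system would tune the thresholds to the camera's noise floor and exposure settings.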