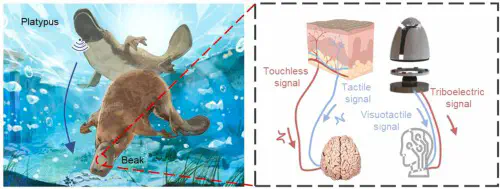

A platypus-inspired electro-mechanosensory finger for remote control and tactile sensing

Abstract

Advances in human-robot interaction (HRI) are essential for the development of intelligent robots, but paradigms that integrate remote control and tactile sensing for an ideal HRI are lacking. In this study, inspired by the sensing of the platypus beak, we propose a bionic electro-mechanosensory finger (EM-Finger) that synergizes triboelectric and visuotactile sensing for remote control and tactile perception. A triboelectric sensor array made of a patterned liquid-metal-polymer conductive (LMPC) layer encodes both touchless and tactile interactions with external objects into voltage signals in the air, and responds to electrical stimuli underwater for amphibious wireless communication. In addition, a three-dimensional finger-shaped visuotactile sensing system, which uses the same LMPC layer as a reflector, measures contact-induced deformation through marker detection and tracking methods. A bioinspired bimodal deep learning algorithm fuses the triboelectric and visuotactile signals and classifies 18 common material types under varying contact forces with an accuracy of 94.4%. The amphibious wireless communication capability of the triboelectric sensor array enables touchless HRI in the air and underwater, even in the presence of obstacles, while the whole system realizes high-resolution tactile sensing. By naturally integrating remote control and tactile sensing, the proposed EM-Finger could pave the way for enhanced HRI in machine intelligence.
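
To illustrate how marker tracking can turn camera observations into a deformation measurement, the following minimal sketch matches marker centroids between a reference (no-contact) frame and a current frame and returns per-marker displacements. It is not the method used in this work: the greedy nearest-neighbor matching, the function name `track_markers`, the `max_dist` threshold, and the example coordinates are all assumptions made for illustration, and marker detection itself is assumed to have already produced the centroids.

```python
import math


def track_markers(reference, current, max_dist=5.0):
    """Greedily match each reference marker to its nearest unused current marker.

    reference, current: lists of (x, y) marker centroids in pixels.
    max_dist: hypothetical search radius in pixels (an assumption).
    Returns one (dx, dy) displacement per reference marker, or None
    when no unused marker lies within max_dist.
    """
    used = set()
    displacements = []
    for rx, ry in reference:
        best_j, best_d = None, max_dist
        for j, (cx, cy) in enumerate(current):
            if j in used:
                continue
            d = math.hypot(cx - rx, cy - ry)
            if d <= best_d:
                best_j, best_d = j, d
        if best_j is None:
            # Marker lost (occluded or moved beyond the search radius).
            displacements.append(None)
        else:
            used.add(best_j)
            cx, cy = current[best_j]
            displacements.append((cx - rx, cy - ry))
    return displacements


# Illustrative centroids only, not measured data.
reference = [(10.0, 10.0), (20.0, 10.0), (30.0, 10.0)]
current = [(10.0, 10.0), (21.0, 12.0), (30.0, 10.5)]
field = track_markers(reference, current)
```

In this example the second marker moves the most, which would correspond to the region of largest contact-induced deformation. A practical system would likely need a more robust matching scheme for dense marker patterns and large displacements.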